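Kuboard - An excellent Kubernetes management dashboard

Installing Kubernetes v1.15.3 with kubeadm

Document highlights

With so many Kubernetes installation guides online, why is this one a better reference?

Verified by many users

More than 200 people complete a Kubernetes installation by following this document every day. Readers continually suggest improvements to it.

Continuously updated and improved

Online Q&A

Requirements

For Kubernetes beginners, we recommend purchasing the following configuration on Alibaba Cloud (you can also use your own virtual machines, a private cloud, or whichever Linux environment is easiest for you to obtain):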


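3 ECS instances with 2 cores and 4 GB of memory each (burstable instance t5 ecs.t5-c1m2.large or equivalent, about 0.4 CNY per hour per instance, no charge while stopped)

CentOS 7.6

Software versions after installation:

Kubernetes v1.15.3

calico 3.8.2

nginx-ingress 1.5.3

Docker 18.09.7

To install an earlier version of Kubernetes, see: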

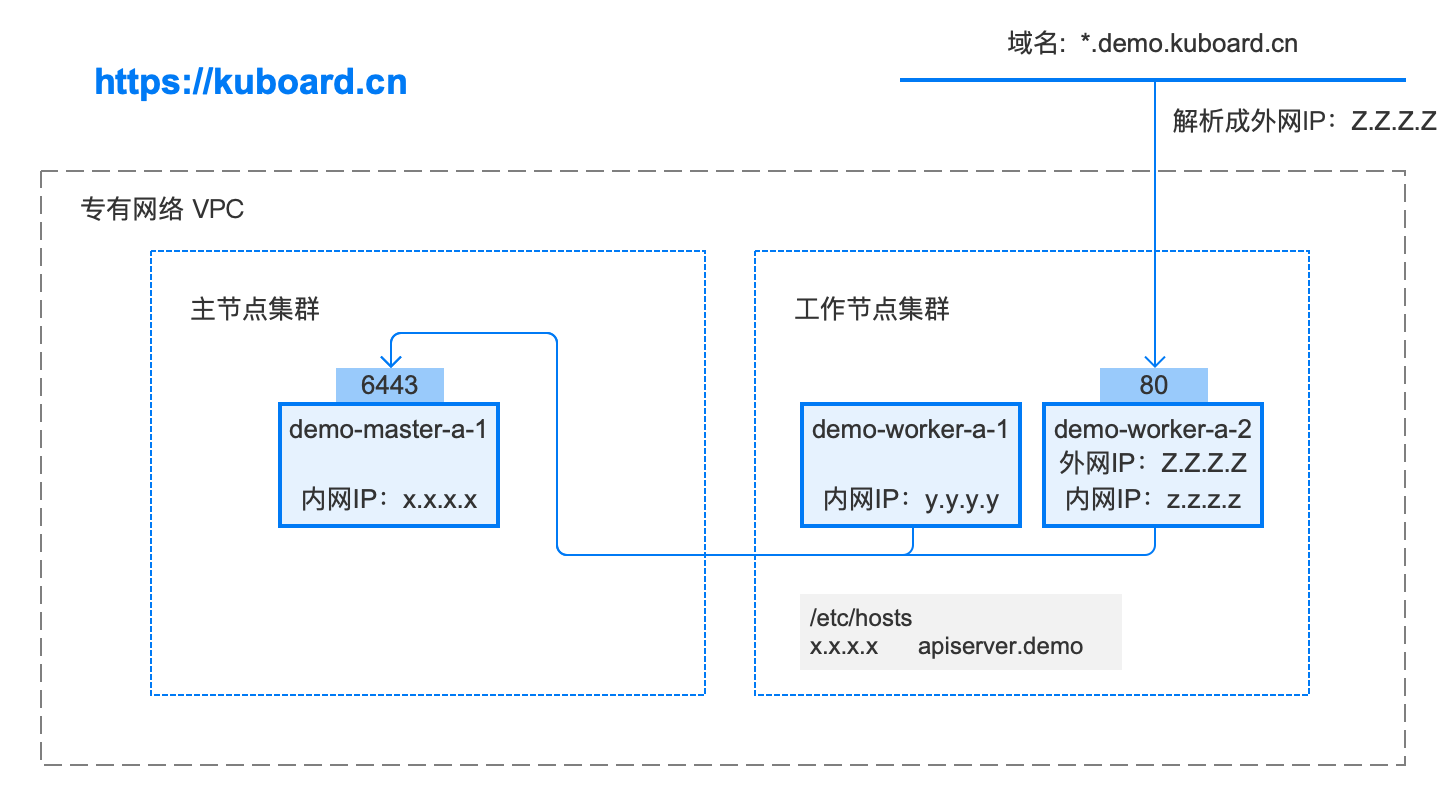

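The diagram shows the topology after installation. The source file of the topology diagram can be downloaded and opened with Axure RP 9.0.

TIP

About binary installation

Since there are now fairly convenient ways to obtain the Kubernetes images, this document sidesteps binary installation.

Check CentOS / hostname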

cat /etc/redhat-release

hostname

lscpu
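The manual CPU check above can be scripted. This is a minimal sketch, assuming the helper name check_cpu_count (hypothetical) and relying on the fact that kubeadm init's preflight check expects at least 2 CPUs on the control-plane node, which matches the 2-core instances recommended earlier.

```shell
# check_cpu_count N: succeed when N meets the 2-CPU minimum.
# The function name is illustrative and not part of any tool.
check_cpu_count() {
  [ "$1" -ge 2 ]
}

# getconf reports the number of processors currently online.
cpus=$(getconf _NPROCESSORS_ONLN)
if check_cpu_count "$cpus"; then
  echo "CPU check passed: ${cpus} cores"
else
  echo "CPU check failed: ${cpus} core(s), at least 2 are needed"
fi
```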


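Operating system compatibility

| CentOS version | Compatible with this document | Notes |
| --- | --- | --- |
| 7.6 | 😄 | Verified |
| 7.5 | 😄 | Verified |
| 7.4 | 🤔 | Not yet verified |
| 7.3 | 🤔 | Not yet verified |
| 7.2 | 😞 | Confirmed to cause kubelet startup failures |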
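The compatibility list can be turned into a quick lookup. The sketch below assumes a hypothetical helper named centos_compat and assumes that /etc/redhat-release contains a string such as "CentOS Linux release 7.6.1810 (Core)"; the status words are shorthand for the entries listed above.

```shell
# centos_compat RELEASE_STRING: map the text of /etc/redhat-release to the
# status given in the compatibility list. Hypothetical helper name.
centos_compat() {
  case "$1" in
    *" 7.6"*|*" 7.5"*) echo "verified" ;;
    *" 7.4"*|*" 7.3"*) echo "unverified" ;;
    *" 7.2"*)          echo "incompatible" ;;
    *)                 echo "unknown" ;;
  esac
}

# Only read the file when it exists, so the sketch also runs on other systems.
if [ -r /etc/redhat-release ]; then
  centos_compat "$(cat /etc/redhat-release)"
fi
```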

Changing the hostname

If you need to change the hostname, run the following commands:

hostnamectl set-hostname your-new-host-name

hostnamectl status

echo "127.0.0.1   $(hostname)" >> /etc/hosts
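Appending to /etc/hosts with echo adds a duplicate line every time the command is repeated. A minimal sketch of an idempotent alternative follows; add_hosts_entry is a hypothetical helper, and the demonstration writes to a scratch file rather than the real /etc/hosts.

```shell
# add_hosts_entry FILE IP NAME: append "IP NAME" to FILE unless a line with
# exactly that IP and NAME already exists. Hypothetical helper name.
add_hosts_entry() {
  file=$1 ip=$2 name=$3
  if ! awk -v ip="$ip" -v name="$name" \
       '$1 == ip && $2 == name { found = 1 } END { exit !found }' "$file"; then
    printf '%s   %s\n' "$ip" "$name" >> "$file"
  fi
}

# Demonstrate on a scratch file instead of the real /etc/hosts.
hosts_file=$(mktemp)
add_hosts_entry "$hosts_file" 127.0.0.1 demo-master-a-1
add_hosts_entry "$hosts_file" 127.0.0.1 demo-master-a-1   # second call is a no-op
cat "$hosts_file"
```

On a real node the first argument would be /etc/hosts and the name would be the output of hostname.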


Install docker / kubelet

Run the following as root on all nodes to install the software:

docker

nfs-utils

kubectl / kubeadm / kubelet

curl -sSL https://kuboard.cn/install-script/v1.15.3/install-kubelet.sh | sh


Running the following code manually has exactly the same effect as the quick install.

#!/bin/bash

# Run on both master and worker nodes

# Remove old Docker packages
yum remove -y docker \
  docker-client \
  docker-client-latest \
  docker-common \
  docker-latest \
  docker-latest-logrotate \
  docker-logrotate \
  docker-selinux \
  docker-engine-selinux \
  docker-engine

# Set up the Docker yum repository
yum install -y yum-utils \
  device-mapper-persistent-data \
  lvm2
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Install and start Docker
yum install -y docker-ce-18.09.7 docker-ce-cli-18.09.7 containerd.io
systemctl enable docker
systemctl start docker

# Install nfs-utils
yum install -y nfs-utils

# Disable the firewall
systemctl stop firewalld
systemctl disable firewalld

# Disable SELinux
setenforce 0
sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

# Turn swap off now and remove it from /etc/fstab (a backup is kept)
swapoff -a
yes | cp /etc/fstab /etc/fstab_bak
cat /etc/fstab_bak | grep -v swap > /etc/fstab

# Update /etc/sysctl.conf: rewrite existing entries, then append them
# in case they were not present
sed -i "s#^net.ipv4.ip_forward.*#net.ipv4.ip_forward=1#g" /etc/sysctl.conf
sed -i "s#^net.bridge.bridge-nf-call-ip6tables.*#net.bridge.bridge-nf-call-ip6tables=1#g" /etc/sysctl.conf
sed -i "s#^net.bridge.bridge-nf-call-iptables.*#net.bridge.bridge-nf-call-iptables=1#g" /etc/sysctl.conf
echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf
echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf
echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf
# Apply the settings
sysctl -p

# Configure the Kubernetes yum repository
cat << EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

# Remove old versions, then install kubelet, kubeadm and kubectl
yum remove -y kubelet kubeadm kubectl
yum install -y kubelet-1.15.3 kubeadm-1.15.3 kubectl-1.15.3

# Switch the Docker cgroup driver to systemd
sed -i "s#^ExecStart=/usr/bin/dockerd.*#ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd#g" /usr/lib/systemd/system/docker.service

# Configure a Docker registry mirror
curl -sSL https://get.daocloud.io/daotools/set_mirror.sh | sh -s http://f1361db2.m.daocloud.io

# Restart Docker and start kubelet
systemctl daemon-reload
systemctl restart docker
systemctl enable kubelet && systemctl start kubelet

docker version
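The script rewrites the three kernel parameters with sed and then appends them again with echo, so running it twice leaves duplicate lines in /etc/sysctl.conf. The sketch below shows one way to keep exactly one line per key; set_conf_key is a hypothetical helper, and the demonstration works on a scratch file with made-up starting content.

```shell
# set_conf_key FILE KEY VALUE: ensure FILE contains exactly one
# "KEY = VALUE" line. Hypothetical helper name.
set_conf_key() {
  file=$1 key=$2 value=$3
  tmp=$(mktemp)
  # Copy every line except existing assignments of KEY.
  awk -v key="$key" '{
      line = $0
      sub(/^[ \t]+/, "", line)      # ignore leading whitespace
      split(line, kv, /[ \t]*=/)    # kv[1] is the text before "="
      if (kv[1] != key) print
    }' "$file" > "$tmp"
  printf '%s = %s\n' "$key" "$value" >> "$tmp"
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Demonstrate on a scratch file instead of the real /etc/sysctl.conf.
conf=$(mktemp)
printf 'net.ipv4.ip_forward = 0\nvm.swappiness = 10\n' > "$conf"
set_conf_key "$conf" net.ipv4.ip_forward 1
set_conf_key "$conf" net.ipv4.ip_forward 1   # repeating does not duplicate
cat "$conf"
```

On a real node the file would be /etc/sysctl.conf, followed by sysctl -p as in the script.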


WARNING

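If you run systemctl status kubelet at this point, you will see an error saying that kubelet failed to start. Ignore it: kubelet can only start normally after the kubeadm init operation in the following steps has completed.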

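Initialize the master node

TIP

Run as root on the demo-master-a-1 machine.

If a configuration error in an intermediate step makes you want to re-initialize the master node, run kubeadm reset first.

WARNING

The network range used by POD_SUBNET must not overlap with the network range of the master and worker nodes. The value of this field is a CIDR; if you are not yet familiar with the CIDR concept, do not change the value 10.100.0.1/20.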
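The overlap rule above can be checked mechanically. The following is a minimal sketch in plain shell arithmetic; the function names are hypothetical and the node network 172.16.0.0/24 is an assumed example value, not something taken from this guide.

```shell
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# cidr_bounds CIDR: print the first and last address of the block as integers.
cidr_bounds() {
  addr=$(ip_to_int "${1%/*}")
  size=$(( 1 << (32 - ${1#*/}) ))
  first=$(( addr / size * size ))
  echo "$first $(( first + size - 1 ))"
}

# cidr_overlap CIDR1 CIDR2: succeed when the two blocks share any address.
cidr_overlap() {
  set -- $(cidr_bounds "$1") $(cidr_bounds "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

pod_subnet=10.100.0.1/20
node_subnet=172.16.0.0/24   # assumed node network, replace with your own
if cidr_overlap "$pod_subnet" "$node_subnet"; then
  echo "overlap: choose a different POD_SUBNET"
else
  echo "no overlap between $pod_subnet and $node_subnet"
fi
```

Two blocks overlap exactly when each one starts no later than the other ends, which is the comparison made in cidr_overlap.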

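# Run only on the master node
# Replace x.x.x.x with the actual IP of the master node
export MASTER_IP=x.x.x.x
# Replace apiserver.demo with the DNS name you want to use
export APISERVER_NAME=apiserver.demo
# Network range used by the Kubernetes pods
export POD_SUBNET=10.100.0.1/20
echo "${MASTER_IP}    ${APISERVER_NAME}" >> /etc/hosts
curl -sSL https://kuboard.cn/install-script/v1.15.3/init-master.sh | sh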


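# Run only on the master node
# Replace x.x.x.x with the actual IP of the master node
export MASTER_IP=x.x.x.x
# Replace apiserver.demo with the DNS name you want to use
export APISERVER_NAME=apiserver.demo
# Network range used by the Kubernetes pods
export POD_SUBNET=10.100.0.1/20
echo "${MASTER_IP}    ${APISERVER_NAME}" >> /etc/hosts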


#!/bin/bash

# Run only on the master node

# Generate the kubeadm configuration file
rm -f ./kubeadm-config.yaml
cat << EOF > ./kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.15.3
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
controlPlaneEndpoint: "${APISERVER_NAME}:6443"
networking:
  serviceSubnet: "10.96.0.0/16"
  podSubnet: "${POD_SUBNET}"
  dnsDomain: "cluster.local"
EOF

# Initialize the control plane
kubeadm init --config=kubeadm-config.yaml --upload-certs

# Configure kubectl for the root user
rm -rf /root/.kube/
mkdir /root/.kube/
cp -i /etc/kubernetes/admin.conf /root/.kube/config

# Install the calico network plugin, using POD_SUBNET as its address pool
rm -f calico.yaml
wget https://docs.projectcalico.org/v3.8/manifests/calico.yaml
sed -i "s#192\.168\.0\.0/16#${POD_SUBNET}#" calico.yaml
kubectl apply -f calico.yaml
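The sed step in the script replaces Calico's default address pool with POD_SUBNET. The sketch below isolates that substitution so it can be tried on a small sample before touching the real manifest; patch_pod_cidr is a hypothetical helper and the two sample lines only imitate the shape of the entry in calico.yaml.

```shell
# patch_pod_cidr CIDR: read a manifest on stdin and replace the default pool
# 192.168.0.0/16 with CIDR. "#" is used as the sed delimiter because the
# CIDR itself contains "/".
patch_pod_cidr() {
  sed "s#192\.168\.0\.0/16#$1#"
}

# Two-line excerpt in the shape used by calico.yaml (illustrative only).
printf '%s\n' '- name: CALICO_IPV4POOL_CIDR' '  value: "192.168.0.0/16"' \
  | patch_pod_cidr 10.100.0.1/20
```

Note that a stray space after the variable inside the sed expression would end up inside the quoted value in the manifest, so the expression must be written without one.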


Check the master initialization result

watch kubectl get pod -n kube-system -o wide

kubectl get nodes


Initialize the worker nodes

Get the join command parameters

Run on the master node:

kubeadm token create --print-join-command


This prints the kubeadm join command and its parameters, for example:

kubeadm join apiserver.demo:6443 --token mpfjma.4vjjg8flqihor4vt --discovery-token-ca-cert-hash sha256:6f7a8e40a810323672de5eee6f4d19aa2dbdb38411845a1bf5dd63485c43d303

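Initialize the workers

Run on every worker node:

# Run only on worker nodes
# Replace ${MASTER_IP} and ${APISERVER_NAME} with the values used when initializing the master node
echo "${MASTER_IP}    ${APISERVER_NAME}" >> /etc/hosts

# Replace with the output of kubeadm token create on the master node
kubeadm join apiserver.demo:6443 --token mpfjma.4vjjg8flqihor4vt --discovery-token-ca-cert-hash sha256:6f7a8e40a810323672de5eee6f4d19aa2dbdb38411845a1bf5dd63485c43d303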
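Copy-and-paste mistakes in the token or hash are a common reason for a failed join. As a rough sanity check, the sketch below validates only the shape of the two values: kubeadm bootstrap tokens have the form of 6 characters, a dot, and 16 characters from a-z0-9, and the discovery hash is sha256: followed by 64 hexadecimal digits. The function names are hypothetical, and a well-formed value can still be expired or wrong.

```shell
# valid_token TOKEN: succeed when TOKEN matches [a-z0-9]{6}.[a-z0-9]{16}.
valid_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# valid_ca_hash HASH: succeed when HASH is "sha256:" plus 64 hex digits.
valid_ca_hash() {
  printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

# Values copied from the example join command in this guide.
token=mpfjma.4vjjg8flqihor4vt
ca_hash=sha256:6f7a8e40a810323672de5eee6f4d19aa2dbdb38411845a1bf5dd63485c43d303
if valid_token "$token" && valid_ca_hash "$ca_hash"; then
  echo "join arguments look well-formed"
else
  echo "join arguments are malformed"
fi
```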

Check the initialization result

Run on the master node:

kubectl get nodes

The output looks like this:

[root@demo-master-a-1 ~]# kubectl get nodes
NAME              STATUS   ROLES    AGE     VERSION
demo-master-a-1   Ready    master   5m3s    v1.15.3
demo-worker-a-1   Ready    <none>   2m26s   v1.15.3
demo-worker-a-2   Ready    <none>   3m56s   v1.15.3
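When the cluster has more than a handful of nodes, scanning the STATUS column by eye gets tedious. A minimal sketch that filters the node listing follows; not_ready_nodes is a hypothetical helper, and the sample text is an invented variation of the output above in which one worker is NotReady.

```shell
# not_ready_nodes: read `kubectl get nodes` output on stdin and print the
# names of nodes whose STATUS column is not exactly "Ready".
# Cordoned nodes (Ready,SchedulingDisabled) are therefore listed as well.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Invented sample; on a real cluster use: kubectl get nodes | not_ready_nodes
sample='NAME              STATUS     ROLES    AGE     VERSION
demo-master-a-1   Ready      master   5m3s    v1.15.3
demo-worker-a-1   NotReady   <none>   2m26s   v1.15.3
demo-worker-a-2   Ready      <none>   3m56s   v1.15.3'
printf '%s\n' "$sample" | not_ready_nodes
```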


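Remove a worker node

WARNING

Normally you do not need to remove a worker node. If adding a node to the cluster went wrong, you can remove the worker node and then try adding it again.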

Run on the worker node that is about to be removed:

kubeadm reset

Run on the master node demo-master-a-1:

kubectl delete node demo-worker-x-x


TIP

Replace demo-worker-x-x with the name of the worker node to remove.

Worker node names can be obtained by running kubectl get nodes on the node demo-master-a-1.

Install the Ingress Controller

Run on the master node:

kubectl apply -f https://kuboard.cn/install-script/v1.15.3/nginx-ingress.yaml


Run on the master node.

Uninstall only if you want to use a different Ingress Controller:

kubectl delete -f https://kuboard.cn/install-script/v1.15.3/nginx-ingress.yaml


apiVersion: v1
kind: Namespace
metadata:
  name: nginx-ingress
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress
  namespace: nginx-ingress

---

apiVersion: v1
kind: Secret
metadata:
  name: default-server-secret
  namespace: nginx-ingress
type: Opaque
data:
  tls.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN2akNDQWFZQ0NRREFPRjl0THNhWFhEQU5CZ2txaGtpRzl3MEJBUXNGQURBaE1SOHdIUVlEVlFRRERCWk8KUjBsT1dFbHVaM0psYzNORGIyNTBjbTlzYkdWeU1CNFhEVEU0TURreE1qRTRNRE16TlZvWERUSXpNRGt4TVRFNApNRE16TlZvd0lURWZNQjBHQTFVRUF3d1dUa2RKVGxoSmJtZHlaWE56UTI5dWRISnZiR3hsY2pDQ0FTSXdEUVlKCktvWklodmNOQVFFQkJRQURnZ0VQQURDQ0FRb0NnZ0VCQUwvN2hIUEtFWGRMdjNyaUM3QlBrMTNpWkt5eTlyQ08KR2xZUXYyK2EzUDF0azIrS3YwVGF5aGRCbDRrcnNUcTZzZm8vWUk1Y2Vhbkw4WGM3U1pyQkVRYm9EN2REbWs1Qgo4eDZLS2xHWU5IWlg0Rm5UZ0VPaStlM2ptTFFxRlBSY1kzVnNPazFFeUZBL0JnWlJVbkNHZUtGeERSN0tQdGhyCmtqSXVuektURXUyaDU4Tlp0S21ScUJHdDEwcTNRYzhZT3ExM2FnbmovUWRjc0ZYYTJnMjB1K1lYZDdoZ3krZksKWk4vVUkxQUQ0YzZyM1lma1ZWUmVHd1lxQVp1WXN2V0RKbW1GNWRwdEMzN011cDBPRUxVTExSakZJOTZXNXIwSAo1TmdPc25NWFJNV1hYVlpiNWRxT3R0SmRtS3FhZ25TZ1JQQVpQN2MwQjFQU2FqYzZjNGZRVXpNQ0F3RUFBVEFOCkJna3Foa2lHOXcwQkFRc0ZBQU9DQVFFQWpLb2tRdGRPcEsrTzhibWVPc3lySmdJSXJycVFVY2ZOUitjb0hZVUoKdGhrYnhITFMzR3VBTWI5dm15VExPY2xxeC9aYzJPblEwMEJCLzlTb0swcitFZ1U2UlVrRWtWcitTTFA3NTdUWgozZWI4dmdPdEduMS9ienM3bzNBaS9kclkrcUI5Q2k1S3lPc3FHTG1US2xFaUtOYkcyR1ZyTWxjS0ZYQU80YTY3Cklnc1hzYktNbTQwV1U3cG9mcGltU1ZmaXFSdkV5YmN3N0NYODF6cFErUyt1eHRYK2VBZ3V0NHh3VlI5d2IyVXYKelhuZk9HbWhWNThDd1dIQnNKa0kxNXhaa2VUWXdSN0diaEFMSkZUUkk3dkhvQXprTWIzbjAxQjQyWjNrN3RXNQpJUDFmTlpIOFUvOWxiUHNoT21FRFZkdjF5ZytVRVJxbStGSis2R0oxeFJGcGZnPT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=
  tls.key: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcEFJQkFBS0NBUUVBdi91RWM4b1JkMHUvZXVJTHNFK1RYZUprckxMMnNJNGFWaEMvYjVyYy9XMlRiNHEvClJOcktGMEdYaVN1eE9ycXgrajlnamx4NXFjdnhkenRKbXNFUkJ1Z1B0ME9hVGtIekhvb3FVWmcwZGxmZ1dkT0EKUTZMNTdlT1l0Q29VOUZ4amRXdzZUVVRJVUQ4R0JsRlNjSVo0b1hFTkhzbysyR3VTTWk2Zk1wTVM3YUhudzFtMApxWkdvRWEzWFNyZEJ6eGc2clhkcUNlUDlCMXl3VmRyYURiUzc1aGQzdUdETDU4cGszOVFqVUFQaHpxdmRoK1JWClZGNGJCaW9CbTVpeTlZTW1hWVhsMm0wTGZzeTZuUTRRdFFzdEdNVWozcGJtdlFmazJBNnljeGRFeFpkZFZsdmwKMm82MjBsMllxcHFDZEtCRThCay90elFIVTlKcU56cHpoOUJUTXdJREFRQUJBb0lCQVFDZklHbXowOHhRVmorNwpLZnZJUXQwQ0YzR2MxNld6eDhVNml4MHg4Mm15d1kxUUNlL3BzWE9LZlRxT1h1SENyUlp5TnUvZ2IvUUQ4bUFOCmxOMjRZTWl0TWRJODg5TEZoTkp3QU5OODJDeTczckM5bzVvUDlkazAvYzRIbjAzSkVYNzZ5QjgzQm9rR1FvYksKMjhMNk0rdHUzUmFqNjd6Vmc2d2szaEhrU0pXSzBwV1YrSjdrUkRWYmhDYUZhNk5nMUZNRWxhTlozVDhhUUtyQgpDUDNDeEFTdjYxWTk5TEI4KzNXWVFIK3NYaTVGM01pYVNBZ1BkQUk3WEh1dXFET1lvMU5PL0JoSGt1aVg2QnRtCnorNTZud2pZMy8yUytSRmNBc3JMTnIwMDJZZi9oY0IraVlDNzVWYmcydVd6WTY3TWdOTGQ5VW9RU3BDRkYrVm4KM0cyUnhybnhBb0dCQU40U3M0ZVlPU2huMVpQQjdhTUZsY0k2RHR2S2ErTGZTTXFyY2pOZjJlSEpZNnhubmxKdgpGenpGL2RiVWVTbWxSekR0WkdlcXZXaHFISy9iTjIyeWJhOU1WMDlRQ0JFTk5jNmtWajJTVHpUWkJVbEx4QzYrCk93Z0wyZHhKendWelU0VC84ajdHalRUN05BZVpFS2FvRHFyRG5BYWkyaW5oZU1JVWZHRXFGKzJyQW9HQkFOMVAKK0tZL0lsS3RWRzRKSklQNzBjUis3RmpyeXJpY05iWCtQVzUvOXFHaWxnY2grZ3l4b25BWlBpd2NpeDN3QVpGdwpaZC96ZFB2aTBkWEppc1BSZjRMazg5b2pCUmpiRmRmc2l5UmJYbyt3TFU4NUhRU2NGMnN5aUFPaTVBRHdVU0FkCm45YWFweUNweEFkREtERHdObit3ZFhtaTZ0OHRpSFRkK3RoVDhkaVpBb0dCQUt6Wis1bG9OOTBtYlF4VVh5YUwKMjFSUm9tMGJjcndsTmVCaWNFSmlzaEhYa2xpSVVxZ3hSZklNM2hhUVRUcklKZENFaHFsV01aV0xPb2I2NTNyZgo3aFlMSXM1ZUtka3o0aFRVdnpldm9TMHVXcm9CV2xOVHlGanIrSWhKZnZUc0hpOGdsU3FkbXgySkJhZUFVWUNXCndNdlQ4NmNLclNyNkQrZG8wS05FZzFsL0FvR0FlMkFVdHVFbFNqLzBmRzgrV3hHc1RFV1JqclRNUzRSUjhRWXQKeXdjdFA4aDZxTGxKUTRCWGxQU05rMXZLTmtOUkxIb2pZT2pCQTViYjhibXNVU1BlV09NNENoaFJ4QnlHbmR2eAphYkJDRkFwY0IvbEg4d1R0alVZYlN5T294ZGt5OEp0ek90ajJhS0FiZHd6NlArWDZDODhjZmxYVFo5MWpYL3RMCjF3TmRKS2tDZ1lCbyt0UzB5TzJ2SWFmK2UwSkN5TGhzVDQ5cTN3Zis2QWVqWGx2WDJ1VnRYejN5QTZnbXo5aCsKcDNlK2JMRUxwb3B0WFhNdUFRR0xhUkcrYlNNcjR5dERYbE5ZSndUeThXczNKY3dlSTdqZVp2b0ZpbmNvVlVIMwphdmxoTUVCRGYxSjltSDB5cDBwWUNaS2ROdHNvZEZtQktzVEtQMjJhTmtsVVhCS3gyZzR6cFE9PQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-config
  namespace: nginx-ingress
data:
  server-names-hash-bucket-size: "1024"

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: nginx-ingress
rules:
- apiGroups:
  - ""
  resources:
  - services
  - endpoints
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - secrets
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - get
  - list
  - watch
  - update
  - create
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - list
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - extensions
  resources:
  - ingresses
  verbs:
  - list
  - watch
  - get
- apiGroups:
  - "extensions"
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - k8s.nginx.org
  resources:
  - virtualservers
  - virtualserverroutes
  verbs:
  - list
  - watch
  - get
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: nginx-ingress
subjects:
- kind: ServiceAccount
  name: nginx-ingress
  namespace: nginx-ingress
roleRef:
  kind: ClusterRole
  name: nginx-ingress
  apiGroup: rbac.authorization.k8s.io

---

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: nginx-ingress
  namespace: nginx-ingress
  annotations:
    prometheus.io/scrape: "true"
    prometheus.io/port: "9113"
spec:
  selector:
    matchLabels:
      app: nginx-ingress
  template:
    metadata:
      labels:
        app: nginx-ingress
    spec:
      serviceAccountName: nginx-ingress
      containers:
      - image: nginx/nginx-ingress:1.5.3
        name: nginx-ingress
        ports:
        - name: http
          containerPort: 80
          hostPort: 80
        - name: https
          containerPort: 443
          hostPort: 443
        - name: prometheus
          containerPort: 9113
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        args:
          - -nginx-configmaps=$(POD_NAMESPACE)/nginx-config
          - -default-server-tls-secret=$(POD_NAMESPACE)/default-server-secret
          - -enable-prometheus-metrics


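Configure DNS resolution

Resolve the domain *.demo.yourdomain.com to z.z.z.z, the IP address of demo-worker-a-2 (the address y.y.y.y of demo-worker-a-1 also works).

Verify the configuration

Open a.demo.yourdomain.com in a browser; you should get a 404 Not Found error page.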
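The same check can be made from a terminal. This is a minimal sketch, assuming curl is installed; both function names are hypothetical, and the URL is the placeholder domain used in this guide, so the commented call only works after you substitute your real domain.

```shell
# http_status URL: print only the HTTP status code returned for URL.
# curl prints 000 when no HTTP response was received at all.
http_status() {
  curl -s -o /dev/null -w '%{http_code}' "$1"
}

# ingress_answers CODE: succeed when CODE is the 404 expected from the
# Ingress Controller while no Ingress rules have been defined yet.
ingress_answers() {
  [ "$1" = "404" ]
}

# Example call (needs network access and your real domain):
#   ingress_answers "$(http_status http://a.demo.yourdomain.com)" \
#     && echo "Ingress Controller is reachable"
```

A 404 is the healthy answer here because the controller is responding but has no route for the requested host yet.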

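Note

Many beginners run into problems when installing the Ingress Controller. Do not be discouraged: you can skip the Install the Ingress Controller section for now and come back to it after working through the Kubernetes Getting Started and Accessing Your Application over the Internet sections.

Next steps 🎉 🎉 🎉

You have finished installing the Kubernetes cluster. As a next step:

Install Kuboard

Before installing Kuboard, first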

Last updated: 2021-05-28 23:01:23
