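Installing a Highly Available Kubernetes Cluster

Introduction: There are many ways to install Kubernetes. The cluster installation described in this document has the following characteristics: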

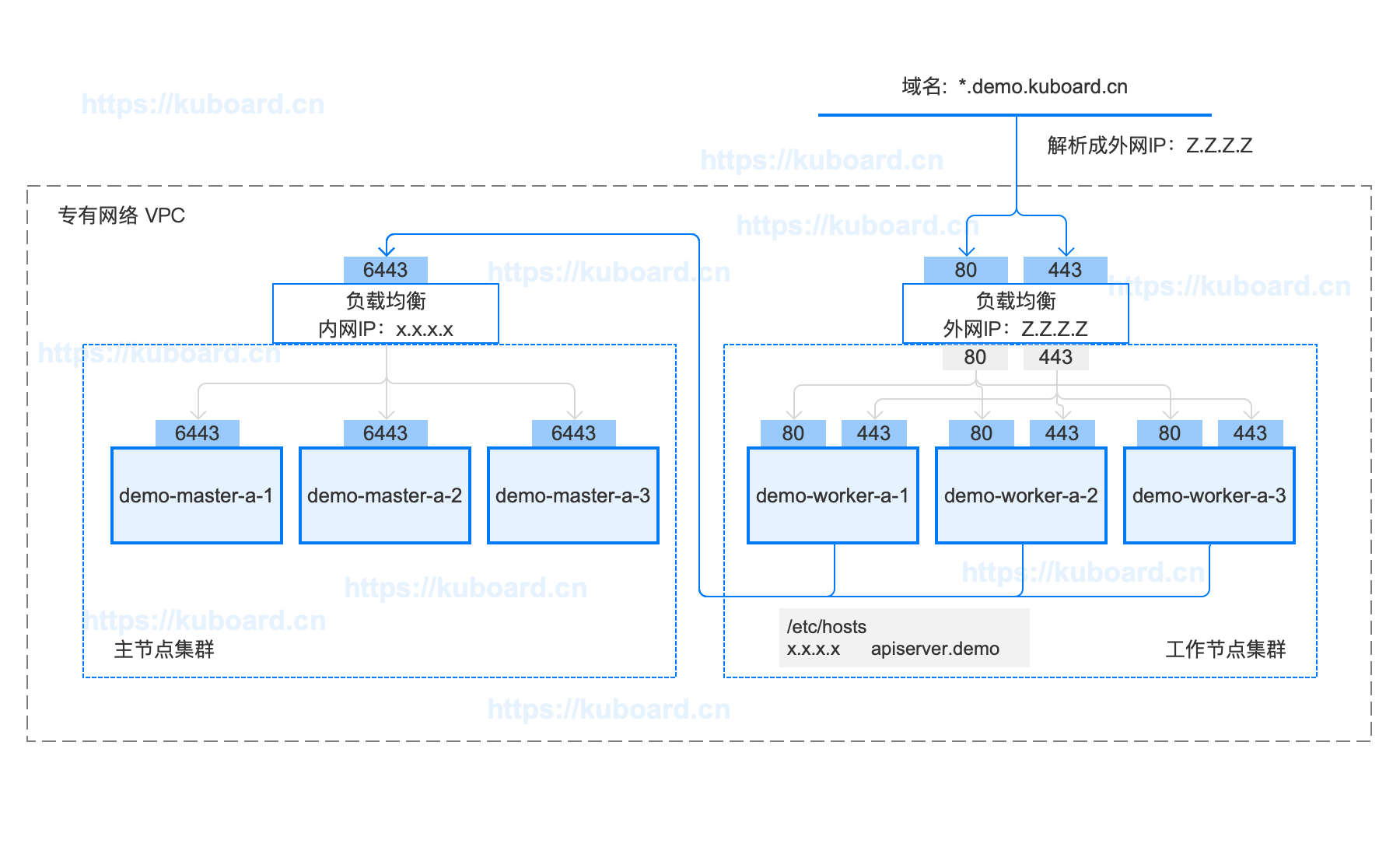

The topology after installation is shown below. The source file of the topology diagram can be downloaded and opened with Axure RP 9.0.


Check the CentOS version and hostname

cat /etc/redhat-release

hostname


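Operating system compatibility

| CentOS version | Compatible with this document | Notes |
|---|---|---|
| 7.7 | Yes | Verified |
| 7.6 | Yes | Verified |
| 7.5 | No | Confirmed to cause kubelet to fail to start |
| 7.4 | No | Same as above |
| 7.3 | No | Same as above |
| 7.2 | No | Same as above |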
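The table above can be turned into a quick pre-flight check. The sketch below uses a hypothetical helper, `centos_compat`, which is not part of the original scripts. It extracts the major.minor version from a `/etc/redhat-release` line and reports where that version falls in the table.

```shell
#!/bin/bash
# Hypothetical helper: classify a CentOS release string against the
# compatibility table. Prints "verified", "unsupported" or "unknown".
centos_compat() {
  local release="$1" ver
  # Take the first "X.Y" number, e.g. "7.7" from
  # "CentOS Linux release 7.7.1908 (Core)"
  ver=$(printf '%s\n' "$release" | grep -oE '[0-9]+\.[0-9]+' | head -n 1)
  case "$ver" in
    7.6|7.7)         echo "verified" ;;
    7.2|7.3|7.4|7.5) echo "unsupported" ;;
    *)               echo "unknown" ;;
  esac
}

# Check the local machine when the release file exists
if [ -r /etc/redhat-release ]; then
  centos_compat "$(cat /etc/redhat-release)"
fi
```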

Change the hostname

If you need to change the hostname, run the following commands:

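# Set the new hostname (replace your-new-host-name)
hostnamectl set-hostname your-new-host-name
# Confirm the change
hostnamectl status
# Make the new hostname resolve locally
echo "127.0.0.1   $(hostname)" >> /etc/hosts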
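Appending with `>>` adds a duplicate line every time the command is re-run. The following is a minimal sketch of an idempotent variant. `add_hosts_entry` is a hypothetical helper, and the hosts file path is a parameter so that you can try it on a scratch copy first.

```shell
#!/bin/bash
# Hypothetical helper: append "IP NAME" to a hosts file only if no line
# already maps NAME. Usage: add_hosts_entry IP NAME [FILE]
add_hosts_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # Look for NAME as a whole whitespace-separated field (after the IP)
  if awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    echo "$name already present in $file"
  else
    echo "$ip   $name" >> "$file"
  fi
}
```

For example, `add_hosts_entry 127.0.0.1 "$(hostname)"` can be run repeatedly without growing `/etc/hosts`. Commented-out lines are not treated specially in this sketch.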


Check the network

Run the following commands on all nodes:

[root@demo-master-a-1 ~]$ ip route show

default via 172.21.0.1 dev eth0

169.254.0.0/16 dev eth0 scope link metric 1002

172.21.0.0/20 dev eth0 proto kernel scope link src 172.21.0.12

[root@demo-master-a-1 ~]$ ip address

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:16:3e:12:a4:1b brd ff:ff:ff:ff:ff:ff

inet 172.17.216.80/20 brd 172.17.223.255 scope global dynamic eth0

valid_lft 305741654sec preferred_lft 305741654sec
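To read the default interface and its address from these two outputs in a script, a minimal sketch follows. It assumes the iproute2 output format shown in the example. `default_iface` and `iface_ipv4` are hypothetical helper names; both read the command output from stdin so they can be tested on captured text.

```shell
#!/bin/bash
# Hypothetical helpers that parse iproute2 output read from stdin.

# Print the device of the default route, e.g. "eth0" from
# "default via 172.21.0.1 dev eth0"
default_iface() {
  awk '$1 == "default" { for (i = 2; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}

# Print the first IPv4 address (without prefix length) of device $1
# from "ip address" output
iface_ipv4() {
  awk -v dev="$1" '
    /^[0-9]+: / { cur = $2; sub(/:$/, "", cur) }   # "2: eth0: ..." starts a device block
    cur == dev && $1 == "inet" { split($2, a, "/"); print a[1]; exit }
  '
}

# On a live node:
#   dev=$(ip route show | default_iface)
#   ip address | iface_ipv4 "$dev"
```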


IP address used by kubelet

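The output of ip route show tells you the machine's default network interface, usually eth0 (for example, default via 172.21.0.1 dev eth0). The output of ip address shows the IP address of that default interface (for example, 172.17.216.80). Kubernetes uses this IP address to communicate with the other nodes in the cluster.

The IP addresses used by Kubernetes on all nodes must be able to reach each other directly. There must be no NAT mapping and no security group or firewall isolation between them.

Install docker / kubelet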

As root, run the following on all nodes to install docker, nfs-utils and kubectl / kubeadm / kubelet:

curl -sSL https://kuboard.cn/install-script/v1.16.2/install_kubelet.sh | sh


Alternatively, run the following script manually. The result is exactly the same as the quick installation above.

#!/bin/bash

# Remove any previously installed Docker packages

yum remove -y docker \

docker-client \

docker-client-latest \

docker-common \

docker-latest \

docker-latest-logrotate \

docker-logrotate \

docker-selinux \

docker-engine-selinux \

docker-engine

# Install Docker CE 18.09.7 from the Aliyun mirror
yum install -y yum-utils \

device-mapper-persistent-data \

lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce-18.09.7 docker-ce-cli-18.09.7 containerd.io

systemctl enable docker

systemctl start docker

# Install nfs-utils (for NFS volumes) and wget
yum install -y nfs-utils

yum install -y wget

# Disable the firewall and SELinux
systemctl stop firewalld

systemctl disable firewalld

setenforce 0

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

# Turn off swap now and on every boot (kubelet refuses to start with swap on by default)
swapoff -a

yes | cp /etc/fstab /etc/fstab_bak

cat /etc/fstab_bak | grep -v swap > /etc/fstab

# Enable IP forwarding and make bridged traffic visible to iptables
sed -i "s#^net.ipv4.ip_forward.*#net.ipv4.ip_forward=1#g" /etc/sysctl.conf

sed -i "s#^net.bridge.bridge-nf-call-ip6tables.*#net.bridge.bridge-nf-call-ip6tables=1#g" /etc/sysctl.conf

sed -i "s#^net.bridge.bridge-nf-call-iptables.*#net.bridge.bridge-nf-call-iptables=1#g" /etc/sysctl.conf

echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf

echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf

echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf

sysctl -p

# Configure the Kubernetes yum repository (Aliyun mirror)
cat << EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# Reinstall kubelet, kubeadm and kubectl at version 1.16.2
yum remove -y kubelet kubeadm kubectl

yum install -y kubelet-1.16.2 kubeadm-1.16.2 kubectl-1.16.2

# Switch Docker to the systemd cgroup driver
sed -i "s#^ExecStart=/usr/bin/dockerd.*#ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd#g" /usr/lib/systemd/system/docker.service

# Configure a Docker registry mirror
curl -sSL https://get.daocloud.io/daotools/set_mirror.sh | sh -s http://f1361db2.m.daocloud.io

# Reload systemd units, restart Docker and start kubelet
systemctl daemon-reload

systemctl restart docker

systemctl enable kubelet && systemctl start kubelet

docker version


WARNING

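If you run systemctl status kubelet at this point, you will see an error saying that kubelet failed to start. Ignore this error. kubelet can only start normally after the kubeadm init step later in this document has been completed.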
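Two of the settings made by the installation script are easy to check once it finishes: swap should be off, and Docker should report the systemd cgroup driver. The sketch below assumes the standard `/proc/swaps` format (a header line plus one line per active swap device) and the `Cgroup Driver:` line printed by `docker info`. The helper names are hypothetical.

```shell
#!/bin/bash
# Hypothetical post-install checks.

# Succeeds when the swaps file (default /proc/swaps) lists no active
# swap device, i.e. it contains only the header line.
swap_is_off() {
  local file="${1:-/proc/swaps}"
  [ "$(wc -l < "$file")" -le 1 ]
}

# Reads "docker info" output on stdin and prints the cgroup driver value.
cgroup_driver() {
  awk -F': *' '/^ *Cgroup Driver:/ { print $2; exit }'
}

# On a node:
#   swap_is_off && echo "swap is off"
#   [ "$(docker info 2>/dev/null | cgroup_driver)" = "systemd" ] && echo "cgroup driver ok"
```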

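Initialize the API Server

Create a Load Balancer (private network) for the ApiServer:

- Listener port: 6443 / TCP
- Backend resource group: demo-master-a-1, demo-master-a-2, demo-master-a-3
- Backend port: 6443
- Enable session persistence based on the source address

Assume that, once created, the Load Balancer's IP address is x.x.x.x.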
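HAProxy is one common way to build this Load Balancer. The sketch below generates a minimal HAProxy configuration fragment. `mode tcp` passes TLS straight through to the API servers, and `balance source` pins each client address to one backend, which matches the source-address session persistence required above. `haproxy_apiserver_cfg` is a hypothetical helper, and the master IPs you pass to it are your own. Global/defaults sections (timeouts), installing HAProxy, and making the Load Balancer itself highly available are outside the scope of this sketch.

```shell
#!/bin/bash
# Hypothetical helper: print an HAProxy frontend/backend pair that forwards
# TCP 6443 to the API server on every master IP given as an argument.
# Usage: haproxy_apiserver_cfg IP1 IP2 IP3 > apiserver.cfg
haproxy_apiserver_cfg() {
  local i=1 ip
  cat <<'EOF'
frontend kube-apiserver
    bind *:6443
    mode tcp
    default_backend kube-apiserver-masters

backend kube-apiserver-masters
    mode tcp
    balance source
EOF
  for ip in "$@"; do
    # "check" enables TCP health checks so a failed master leaves the rotation
    echo "    server master-$i $ip:6443 check"
    i=$((i + 1))
  done
}
```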

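How the Load Balancer is implemented depends on your environment. This document does not describe in detail how to set one up. Options to consider include:

- nginx
- haproxy
- keepalived
- a load balancer product from your cloud provider

Initialize the first master node

TIP

Run the following as root on demo-master-a-1. If a configuration step goes wrong while initializing the master node and you want to initialize it again, run kubeadm reset first.

About the environment variables used during initialization: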

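- APISERVER_NAME must not be the hostname of the master node.
- APISERVER_NAME may contain only lowercase letters, digits and dots. It must not contain hyphens.
- The network range used by POD_SUBNET must not overlap with the CIDR of the master or worker nodes. If you are not familiar with the concept of CIDR, do not change the value 10.100.0.1/16.

Run on the first master node demo-master-a-1: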

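# API server name; must follow the rules above
export APISERVER_NAME=apiserver.demo
# Pod network CIDR; must not overlap with the node network
export POD_SUBNET=10.100.0.1/16
# On the first master, resolve the API server name to this machine
echo "127.0.0.1    ${APISERVER_NAME}" >> /etc/hosts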

curl -sSL https://kuboard.cn/install-script/v1.16.2/init_master.sh | sh
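Whichever way you initialize, the rules for APISERVER_NAME and POD_SUBNET can be checked first. The following is a minimal sketch. `check_apiserver_name`, `ip_to_int` and `cidrs_overlap` are hypothetical helpers. The node CIDR in the usage line (172.21.0.0/20, taken from the ip route show example) is only an example value.

```shell
#!/bin/bash
# Hypothetical pre-checks for the variables used by kubeadm init.

# APISERVER_NAME: lowercase letters, digits and dots only, and not the hostname
check_apiserver_name() {
  local name="$1" host="${2:-$(hostname)}" re='^[[:lower:][:digit:].]+$'
  [[ $name =~ $re ]] || { echo "invalid characters in $name"; return 1; }
  [ "$name" != "$host" ] || { echo "$name must not be the hostname"; return 1; }
}

# Convert a dotted IPv4 address to a 32-bit integer
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeeds when two CIDR ranges share at least one address: CIDR blocks
# overlap exactly when they agree on the shorter of the two prefixes.
cidrs_overlap() {
  local n1="${1%/*}" p1="${1#*/}" n2="${2%/*}" p2="${2#*/}"
  local p=$(( p1 < p2 ? p1 : p2 ))
  local mask=$(( p == 0 ? 0 : (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$n1") & mask) == ($(ip_to_int "$n2") & mask) ))
}

# Usage:
#   check_apiserver_name "$APISERVER_NAME" && ! cidrs_overlap "$POD_SUBNET" 172.21.0.0/20
```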


Alternatively, to initialize manually, set the same environment variables and then run the script below:

export APISERVER_NAME=apiserver.demo
export POD_SUBNET=10.100.0.1/16
echo "127.0.0.1    ${APISERVER_NAME}" >> /etc/hosts


#!/bin/bash

# Exit immediately if any command fails
set -e

# Both variables must have been exported beforehand
if [ ${#POD_SUBNET} -eq 0 ] || [ ${#APISERVER_NAME} -eq 0 ]; then
  echo -e "\033[31;1mMake sure the environment variables POD_SUBNET and APISERVER_NAME are set\033[0m"
  echo "Current POD_SUBNET=$POD_SUBNET"
  echo "Current APISERVER_NAME=$APISERVER_NAME"
  exit 1
fi

# Generate the kubeadm configuration file
rm -f ./kubeadm-config.yaml
cat << EOF > ./kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.16.2
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
controlPlaneEndpoint: "${APISERVER_NAME}:6443"
networking:
  serviceSubnet: "10.96.0.0/16"
  podSubnet: "${POD_SUBNET}"
  dnsDomain: "cluster.local"
EOF

# Initialize the first master; --upload-certs stores the control-plane
# certificates in the cluster so other masters can join with a certificate key
kubeadm init --config=kubeadm-config.yaml --upload-certs

# Configure kubectl for root
rm -rf /root/.kube/
mkdir /root/.kube/
cp -i /etc/kubernetes/admin.conf /root/.kube/config

# Install the Calico network plugin, replacing its default pod CIDR with POD_SUBNET
rm -f calico-3.9.2.yaml
wget https://kuboard.cn/install-script/calico/calico-3.9.2.yaml
sed -i "s#192\.168\.0\.0/16#${POD_SUBNET}#" calico-3.9.2.yaml
kubectl apply -f calico-3.9.2.yaml


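Output

In the output:

- the kubeadm join command that includes --control-plane --certificate-key is used to initialize the second and third master nodes;
- the last kubeadm join command (the one without --control-plane) is used to initialize worker nodes.

Your Kubernetes control-plane has initialized successfully!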

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join apiserver.k8s:6443 --token 4z3r2v.2p43g28ons3b475v \

--discovery-token-ca-cert-hash sha256:959569cbaaf0cf3fad744f8bd8b798ea9e11eb1e568c15825355879cf4cdc5d6 \

--control-plane --certificate-key 41a741533a038a936759aff43b5680f0e8c41375614a873ea49fde8944614dd6

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join apiserver.k8s:6443 --token 4z3r2v.2p43g28ons3b475v \

--discovery-token-ca-cert-hash sha256:959569cbaaf0cf3fad744f8bd8b798ea9e11eb1e568c15825355879cf4cdc5d6
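The two kubeadm join commands are easy to mix up, so it can help to extract them from a saved copy of this output. The following is a minimal sketch. It assumes the output of kubeadm init was saved to a file, for example with `kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log`; the file name is only an example. `join_commands` is a hypothetical helper that merges each backslash-continued kubeadm join command onto one line.

```shell
#!/bin/bash
# Hypothetical helper: read kubeadm init output on stdin and print every
# "kubeadm join" command on a single line, with "\" continuations merged.
join_commands() {
  awk '
    NF == 0 { next }                             # skip blank lines
    { sub(/^[ \t]+/, "") }                       # drop leading indentation
    /^kubeadm join / { cmd = ""; injoin = 1 }
    injoin {
      line = $0
      cont = sub(/[ \t]*\\$/, "", line)          # strip a trailing backslash
      cmd = (cmd == "") ? line : (cmd " " line)
      if (!cont) { print cmd; injoin = 0 }
    }
  '
}

# Control-plane join (second and third masters) vs. worker join:
#   join_commands < kubeadm-init.log | grep -- '--control-plane'
#   join_commands < kubeadm-init.log | grep -v -- '--control-plane'
```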


Check the master initialization result

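# Watch the kube-system pods until they are all Running (press Ctrl+C to exit)
watch kubectl get pod -n kube-system -o wide
# Check the node status
kubectl get nodes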


WARNING

Wait until all pods (about 9 of them) are in the Running state before moving on to the next step.
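Instead of watching the screen, you can script the wait. The following is a minimal sketch. It assumes the default column layout of `kubectl get pod --no-headers` (NAME, READY, STATUS, ...). `all_pods_running` is a hypothetical helper that reads that output on stdin.

```shell
#!/bin/bash
# Hypothetical helper: succeed only if at least one pod is listed on stdin and
# every pod line has STATUS "Running" (third column of "kubectl get pod --no-headers").
all_pods_running() {
  awk 'NF > 0 { n++; if ($3 != "Running") bad++ } END { exit !(n > 0 && bad == 0) }'
}

# Example loop on demo-master-a-1 (polls every 10 seconds):
#   until kubectl get pod -n kube-system --no-headers | all_pods_running; do
#     sleep 10
#   done
```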

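Initialize the second and third master nodes

Get the join command for master nodes

You can initialize the second and third master nodes together with the first one. You can also convert an existing single-master cluster later, in which case you only need to:

- add a Load Balancer for the masters;
- make /etc/hosts on all nodes resolve apiserver.demo to the Load Balancer's address;
- add the second and third master nodes.

The command for joining master nodes can only be used within 2 hours of initializing the first master node, because the uploaded certificates are deleted after two hours. In the output of initializing the first master node, the kubeadm join command that includes --control-plane --certificate-key is the one for the second and third master nodes, as shown below. Do not run this command yet: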

kubeadm join apiserver.k8s:6443 --token 4z3r2v.2p43g28ons3b475v \

--discovery-token-ca-cert-hash sha256:959569cbaaf0cf3fad744f8bd8b798ea9e11eb1e568c15825355879cf4cdc5d6 \

--control-plane --certificate-key 41a741533a038a936759aff43b5680f0e8c41375614a873ea49fde8944614dd6


Get the certificate key

Run on demo-master-a-1:

kubeadm init phase upload-certs --upload-certs


The output is as follows:

[root@demo-master-a-1 ~]# kubeadm init phase upload-certs --upload-certs

W0902 09:05:28.355623 1046 version.go:98] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://dl.k8s.io/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

W0902 09:05:28.355718 1046 version.go:99] falling back to the local client version: v1.16.2

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

70eb87e62f052d2d5de759969d5b42f372d0ad798f98df38f7fe73efdf63a13c


Get the join command

Run on demo-master-a-1:

kubeadm token create --print-join-command


The output is as follows:

[root@demo-master-a-1 ~]# kubeadm token create --print-join-command

kubeadm join apiserver.demo:6443 --token bl80xo.hfewon9l5jlpmjft --discovery-token-ca-cert-hash sha256:b4d2bed371fe4603b83e7504051dcfcdebcbdcacd8be27884223c4ccc13059a4


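The join command for the second and third master nodes is therefore as follows. The --token and --discovery-token-ca-cert-hash values come from the join command obtained above, and the --certificate-key value comes from the certificate key obtained above:

kubeadm join apiserver.demo:6443 --token ejwx62.vqwog6il5p83uk7y \
--discovery-token-ca-cert-hash sha256:6f7a8e40a810323672de5eee6f4d19aa2dbdb38411845a1bf5dd63485c43d303 \
--control-plane --certificate-key 70eb87e62f052d2d5de759969d5b42f372d0ad798f98df38f7fe73efdf63a13c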
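Assembling this command by hand is error-prone. The following is a minimal sketch that assumes the output formats shown above. `cert_key_from_upload` takes the key printed after `Using certificate key:` by `kubeadm init phase upload-certs --upload-certs`. `control_plane_join` appends the control-plane flags to the join command. Both names are hypothetical.

```shell
#!/bin/bash
# Hypothetical helpers for building the control-plane join command.

# Read "kubeadm init phase upload-certs --upload-certs" output on stdin and print
# the first non-empty line after "Using certificate key:".
cert_key_from_upload() {
  awk 'found && NF { print $1; exit } /Using certificate key:/ { found = 1 }'
}

# $1: output of "kubeadm token create --print-join-command"
# $2: certificate key
control_plane_join() {
  printf '%s --control-plane --certificate-key %s\n' "$1" "$2"
}

# On demo-master-a-1:
#   key=$(kubeadm init phase upload-certs --upload-certs | cert_key_from_upload)
#   control_plane_join "$(kubeadm token create --print-join-command)" "$key"
```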

Initialize the second and third master nodes

Run on demo-master-a-2 and demo-master-a-3:

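# x.x.x.x is the IP address of the ApiServer Load Balancer
export APISERVER_IP=x.x.x.x
export APISERVER_NAME=apiserver.demo
echo "${APISERVER_IP}    ${APISERVER_NAME}" >> /etc/hosts

# Replace with the control-plane join command you assembled above
kubeadm join apiserver.demo:6443 --token ejwx62.vqwog6il5p83uk7y \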

--discovery-token-ca-cert-hash sha256:6f7a8e40a810323672de5eee6f4d19aa2dbdb38411845a1bf5dd63485c43d303 \

--control-plane --certificate-key 70eb87e62f052d2d5de759969d5b42f372d0ad798f98df38f7fe73efdf63a13c


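Troubleshooting

If the join stays stuck at the pre-flight stage, run the following command on the second and third master nodes to check connectivity: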

curl -ik https://apiserver.demo:6443/version

The output should look like this:

HTTP/1.1 200 OK

Cache-Control: no-cache, private

Content-Type: application/json

Date: Wed, 30 Oct 2019 08:13:39 GMT

Content-Length: 263

{

"major": "1",

"minor": "16",

"gitVersion": "v1.16.2",

"gitCommit": "2bd9643cee5b3b3a5ecbd3af49d09018f0773c77",

"gitTreeState": "clean",

"buildDate": "2019-09-18T14:27:17Z",

"goVersion": "go1.12.9",

"compiler": "gc",

"platform": "linux/amd64"

}

If it does not, check whether your Load Balancer is configured correctly.
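The connectivity check can also be scripted. The following is a minimal sketch that assumes the curl -ik output format shown above (status line first, JSON body after the headers). `apiserver_version` is a hypothetical helper that prints gitVersion only when the status is 200. It extracts the field with sed rather than a JSON parser, which is adequate for this flat response but not for JSON in general.

```shell
#!/bin/bash
# Hypothetical helper: read "curl -ik https://apiserver.demo:6443/version"
# output on stdin; print gitVersion and succeed only for an HTTP 200 reply.
apiserver_version() {
  local resp status
  resp=$(cat)
  # Second field of the status line, e.g. "200" from "HTTP/1.1 200 OK"
  status=$(printf '%s\n' "$resp" | awk 'NR == 1 { print $2 }')
  [ "$status" = "200" ] || { echo "unexpected HTTP status: ${status:-none}" >&2; return 1; }
  printf '%s\n' "$resp" | sed -n 's/.*"gitVersion": *"\([^"]*\)".*/\1/p'
}

# On the second or third master:
#   curl -sik --max-time 5 https://apiserver.demo:6443/version | apiserver_version
```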

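Check the master initialization result

Initialize worker nodes

Get the join command parameters

In the output of initializing the first master node, the last kubeadm join command (the one without --control-plane) is the command for initializing worker nodes, as shown below. Do not run this command yet: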

kubeadm join apiserver.k8s:6443 --token 4z3r2v.2p43g28ons3b475v \

--discovery-token-ca-cert-hash sha256:959569cbaaf0cf3fad744f8bd8b798ea9e11eb1e568c15825355879cf4cdc5d6

Run on the first master node demo-master-a-1:

kubeadm token create --print-join-command

This prints the kubeadm join command and its parameters, for example:

kubeadm join apiserver.demo:6443 --token mpfjma.4vjjg8flqihor4vt --discovery-token-ca-cert-hash sha256:6f7a8e40a810323672de5eee6f4d19aa2dbdb38411845a1bf5dd63485c43d303

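Validity period

The token is valid for a limited time (24 hours by default for tokens created with kubeadm token create). Within that period you can use it to initialize any number of worker nodes.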

Initialize the worker nodes

Run on all worker nodes:

# x.x.x.x is the IP address of the ApiServer Load Balancer created earlier
export MASTER_IP=x.x.x.x
export APISERVER_NAME=apiserver.demo
echo "${MASTER_IP}    ${APISERVER_NAME}" >> /etc/hosts

# Replace with the join command obtained above
kubeadm join apiserver.demo:6443 --token mpfjma.4vjjg8flqihor4vt --discovery-token-ca-cert-hash sha256:6f7a8e40a810323672de5eee6f4d19aa2dbdb38411845a1bf5dd63485c43d303

Check the worker initialization result

Run on the first master node demo-master-a-1:
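One way to check is kubectl get nodes, where every node should eventually show Ready. The following is a minimal sketch that automates this. It assumes the default `kubectl get nodes --no-headers` columns (NAME, STATUS, ROLES, AGE, VERSION). `all_nodes_ready` is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: succeed when every node line on stdin has STATUS
# exactly "Ready" (second column of "kubectl get nodes --no-headers").
# "NotReady" and "Ready,SchedulingDisabled" are reported as not ready.
all_nodes_ready() {
  awk 'NF > 0 { n++; if ($2 != "Ready") { bad++; print "not ready: " $1 } }
       END { exit !(n > 0 && bad == 0) }'
}

# On demo-master-a-1:
#   kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready"
```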

Remove a worker node

WARNING

Normally, you do not need to remove worker nodes.

Run on the worker node to be removed:

Run on the first master node demo-master-a-1:

kubectl delete node demo-worker-x-x

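Replace demo-worker-x-x with the name of the worker node to remove. You can get the worker node names by running kubectl get nodes on the first master node demo-master-a-1.

Install the Ingress Controller

Kubernetes supports many Ingress Controllers (Traefik / Kong / Istio / Nginx, etc.). This document recommends https://github.com/nginxinc/kubernetes-ingress

Run on a master node (the content of nginx-ingress.yaml follows the command):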

kubectl apply -f https://kuboard.cn/install-script/v1.16.2/nginx-ingress.yaml


apiVersion: v1
kind: Namespace
metadata:
  name: nginx-ingress

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress
  namespace: nginx-ingress

---

apiVersion: v1
kind: Secret
metadata:
  name: default-server-secret
  namespace: nginx-ingress
type: Opaque
data:
  tls.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN2akNDQWFZQ0NRREFPRjl0THNhWFhEQU5CZ2txaGtpRzl3MEJBUXNGQURBaE1SOHdIUVlEVlFRRERCWk8KUjBsT1dFbHVaM0psYzNORGIyNTBjbTlzYkdWeU1CNFhEVEU0TURreE1qRTRNRE16TlZvWERUSXpNRGt4TVRFNApNRE16TlZvd0lURWZNQjBHQTFVRUF3d1dUa2RKVGxoSmJtZHlaWE56UTI5dWRISnZiR3hsY2pDQ0FTSXdEUVlKCktvWklodmNOQVFFQkJRQURnZ0VQQURDQ0FRb0NnZ0VCQUwvN2hIUEtFWGRMdjNyaUM3QlBrMTNpWkt5eTlyQ08KR2xZUXYyK2EzUDF0azIrS3YwVGF5aGRCbDRrcnNUcTZzZm8vWUk1Y2Vhbkw4WGM3U1pyQkVRYm9EN2REbWs1Qgo4eDZLS2xHWU5IWlg0Rm5UZ0VPaStlM2ptTFFxRlBSY1kzVnNPazFFeUZBL0JnWlJVbkNHZUtGeERSN0tQdGhyCmtqSXVuektURXUyaDU4Tlp0S21ScUJHdDEwcTNRYzhZT3ExM2FnbmovUWRjc0ZYYTJnMjB1K1lYZDdoZ3krZksKWk4vVUkxQUQ0YzZyM1lma1ZWUmVHd1lxQVp1WXN2V0RKbW1GNWRwdEMzN011cDBPRUxVTExSakZJOTZXNXIwSAo1TmdPc25NWFJNV1hYVlpiNWRxT3R0SmRtS3FhZ25TZ1JQQVpQN2MwQjFQU2FqYzZjNGZRVXpNQ0F3RUFBVEFOCkJna3Foa2lHOXcwQkFRc0ZBQU9DQVFFQWpLb2tRdGRPcEsrTzhibWVPc3lySmdJSXJycVFVY2ZOUitjb0hZVUoKdGhrYnhITFMzR3VBTWI5dm15VExPY2xxeC9aYzJPblEwMEJCLzlTb0swcitFZ1U2UlVrRWtWcitTTFA3NTdUWgozZWI4dmdPdEduMS9ienM3bzNBaS9kclkrcUI5Q2k1S3lPc3FHTG1US2xFaUtOYkcyR1ZyTWxjS0ZYQU80YTY3Cklnc1hzYktNbTQwV1U3cG9mcGltU1ZmaXFSdkV5YmN3N0NYODF6cFErUyt1eHRYK2VBZ3V0NHh3VlI5d2IyVXYKelhuZk9HbWhWNThDd1dIQnNKa0kxNXhaa2VUWXdSN0diaEFMSkZUUkk3dkhvQXprTWIzbjAxQjQyWjNrN3RXNQpJUDFmTlpIOFUvOWxiUHNoT21FRFZkdjF5ZytVRVJxbStGSis2R0oxeFJGcGZnPT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=
  tls.key: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcEFJQkFBS0NBUUVBdi91RWM4b1JkMHUvZXVJTHNFK1RYZUprckxMMnNJNGFWaEMvYjVyYy9XMlRiNHEvClJOcktGMEdYaVN1eE9ycXgrajlnamx4NXFjdnhkenRKbXNFUkJ1Z1B0ME9hVGtIekhvb3FVWmcwZGxmZ1dkT0EKUTZMNTdlT1l0Q29VOUZ4amRXdzZUVVRJVUQ4R0JsRlNjSVo0b1hFTkhzbysyR3VTTWk2Zk1wTVM3YUhudzFtMApxWkdvRWEzWFNyZEJ6eGc2clhkcUNlUDlCMXl3VmRyYURiUzc1aGQzdUdETDU4cGszOVFqVUFQaHpxdmRoK1JWClZGNGJCaW9CbTVpeTlZTW1hWVhsMm0wTGZzeTZuUTRRdFFzdEdNVWozcGJtdlFmazJBNnljeGRFeFpkZFZsdmwKMm82MjBsMllxcHFDZEtCRThCay90elFIVTlKcU56cHpoOUJUTXdJREFRQUJBb0lCQVFDZklHbXowOHhRVmorNwpLZnZJUXQwQ0YzR2MxNld6eDhVNml4MHg4Mm15d1kxUUNlL3BzWE9LZlRxT1h1SENyUlp5TnUvZ2IvUUQ4bUFOCmxOMjRZTWl0TWRJODg5TEZoTkp3QU5OODJDeTczckM5bzVvUDlkazAvYzRIbjAzSkVYNzZ5QjgzQm9rR1FvYksKMjhMNk0rdHUzUmFqNjd6Vmc2d2szaEhrU0pXSzBwV1YrSjdrUkRWYmhDYUZhNk5nMUZNRWxhTlozVDhhUUtyQgpDUDNDeEFTdjYxWTk5TEI4KzNXWVFIK3NYaTVGM01pYVNBZ1BkQUk3WEh1dXFET1lvMU5PL0JoSGt1aVg2QnRtCnorNTZud2pZMy8yUytSRmNBc3JMTnIwMDJZZi9oY0IraVlDNzVWYmcydVd6WTY3TWdOTGQ5VW9RU3BDRkYrVm4KM0cyUnhybnhBb0dCQU40U3M0ZVlPU2huMVpQQjdhTUZsY0k2RHR2S2ErTGZTTXFyY2pOZjJlSEpZNnhubmxKdgpGenpGL2RiVWVTbWxSekR0WkdlcXZXaHFISy9iTjIyeWJhOU1WMDlRQ0JFTk5jNmtWajJTVHpUWkJVbEx4QzYrCk93Z0wyZHhKendWelU0VC84ajdHalRUN05BZVpFS2FvRHFyRG5BYWkyaW5oZU1JVWZHRXFGKzJyQW9HQkFOMVAKK0tZL0lsS3RWRzRKSklQNzBjUis3RmpyeXJpY05iWCtQVzUvOXFHaWxnY2grZ3l4b25BWlBpd2NpeDN3QVpGdwpaZC96ZFB2aTBkWEppc1BSZjRMazg5b2pCUmpiRmRmc2l5UmJYbyt3TFU4NUhRU2NGMnN5aUFPaTVBRHdVU0FkCm45YWFweUNweEFkREtERHdObit3ZFhtaTZ0OHRpSFRkK3RoVDhkaVpBb0dCQUt6Wis1bG9OOTBtYlF4VVh5YUwKMjFSUm9tMGJjcndsTmVCaWNFSmlzaEhYa2xpSVVxZ3hSZklNM2hhUVRUcklKZENFaHFsV01aV0xPb2I2NTNyZgo3aFlMSXM1ZUtka3o0aFRVdnpldm9TMHVXcm9CV2xOVHlGanIrSWhKZnZUc0hpOGdsU3FkbXgySkJhZUFVWUNXCndNdlQ4NmNLclNyNkQrZG8wS05FZzFsL0FvR0FlMkFVdHVFbFNqLzBmRzgrV3hHc1RFV1JqclRNUzRSUjhRWXQKeXdjdFA4aDZxTGxKUTRCWGxQU05rMXZLTmtOUkxIb2pZT2pCQTViYjhibXNVU1BlV09NNENoaFJ4QnlHbmR2eAphYkJDRkFwY0IvbEg4d1R0alVZYlN5T294ZGt5OEp0ek90ajJhS0FiZHd6NlArWDZDODhjZmxYVFo5MWpYL3RMCjF3TmRKS2tDZ1lCbyt0UzB5TzJ2SWFmK2UwSkN5TGhzVDQ5cTN3Zis2QWVqWGx2WDJ1VnRYejN5QTZnbXo5aCsKcDNlK2JMRUxwb3B0WFhNdUFRR0xhUkcrYlNNcjR5dERYbE5ZSndUeThXczNKY3dlSTdqZVp2b0ZpbmNvVlVIMwphdmxoTUVCRGYxSjltSDB5cDBwWUNaS2ROdHNvZEZtQktzVEtQMjJhTmtsVVhCS3gyZzR6cFE9PQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-config
  namespace: nginx-ingress
data:
  server-names-hash-bucket-size: "1024"

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: nginx-ingress
rules:
- apiGroups:
  - ""
  resources:
  - services
  - endpoints
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - secrets
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - get
  - list
  - watch
  - update
  - create
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - list
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - extensions
  resources:
  - ingresses
  verbs:
  - list
  - watch
  - get
- apiGroups:
  - "extensions"
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - k8s.nginx.org
  resources:
  - virtualservers
  - virtualserverroutes
  verbs:
  - list
  - watch
  - get

---

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: nginx-ingress
subjects:
- kind: ServiceAccount
  name: nginx-ingress
  namespace: nginx-ingress
roleRef:
  kind: ClusterRole
  name: nginx-ingress
  apiGroup: rbac.authorization.k8s.io

---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx-ingress
  namespace: nginx-ingress
  annotations:
    prometheus.io/scrape: "true"
    prometheus.io/port: "9113"
spec:
  selector:
    matchLabels:
      app: nginx-ingress
  template:
    metadata:
      labels:
        app: nginx-ingress
    spec:
      serviceAccountName: nginx-ingress
      containers:
      - image: nginx/nginx-ingress:1.5.5
        name: nginx-ingress
        ports:
        - name: http
          containerPort: 80
          hostPort: 80
        - name: https
          containerPort: 443
          hostPort: 443
        - name: prometheus
          containerPort: 9113
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        args:
          - -nginx-configmaps=$(POD_NAMESPACE)/nginx-config
          - -default-server-tls-secret=$(POD_NAMESPACE)/default-server-secret
          - -enable-prometheus-metrics


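Complete the following configuration at the IaaS layer (public network Load Balancer)

Create a Load Balancer:

- Listener 1: 80 / TCP, SOURCE_ADDRESS session persistence
- Server pool 1: port 80 of all demo-worker-x-x nodes
- Listener 2: 443 / TCP, SOURCE_ADDRESS session persistence
- Server pool 2: port 443 of all demo-worker-x-x nodes

Assume the IP address of the newly created Load Balancer is z.z.z.z.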

Configure DNS resolution

Resolve the domain name *.demo.yourdomain.com to the Load Balancer's IP address z.z.z.z.

Verify the configuration

Open a.demo.yourdomain.com in a browser. You should get a 404 Not Found error page.

Next steps 🎉 🎉 🎉

You have completed the installation of the Kubernetes cluster. Next, you can:

- give the project a GitHub Star
- install Kuboard, a microservice management UI
- get the free Kubernetes tutorial

Last updated: 2021-04-03 22:11:52